What are Interaction Effects?

An interaction effect occurs when the effect of one variable depends on the value of another variable. Interaction effects are common in regression models, ANOVA, and designed experiments. In this post, I explain interaction effects, the interaction effect test, how to interpret interaction models, and describe the problems you can face if you don’t include them in your model.

In any study, whether it’s a taste test or a manufacturing process, many variables can affect the outcome. Changing these variables can affect the outcome directly. For instance, changing the food condiment in a taste test can affect the overall enjoyment. In this manner, analysts use models to assess the relationship between each independent variable and the dependent variable. This kind of an effect is called a main effect. While main effects are relatively straightforward, it can be a mistake to assess only main effects.

In more complex study areas, the independent variables might interact with each other. Interaction effects indicate that a third variable influences the relationship between an independent and dependent variable. In this situation, statisticians say that these variables interact because the relationship between an independent and dependent variable changes depending on the value of a third variable. This type of effect makes the model more complex, but if the real world behaves this way, it is critical to incorporate it in your model. For example, the relationship between condiments and enjoyment probably depends on the type of food—as we’ll see in this post!

Example of Interaction Effects with Categorical Independent Variables

I think of interaction effects as an “it depends” effect. You’ll see why! Let’s start with an intuitive example to help you understand these effects in an interaction model conceptually.

Imagine that we are conducting a taste test to determine which food condiment produces the highest enjoyment. We’ll perform a two-way ANOVA where our dependent variable is Enjoyment. Our two independent variables are both categorical variables: Food and Condiment.

Our ANOVA model with the interaction term is:

Satisfaction = Food Condiment Food*Condiment

To keep things simple, we’ll include only two foods (ice cream and hot dogs) and two condiments (chocolate sauce and mustard) in our analysis.

Given the specifics of the example, an interaction effect would not be surprising. If someone asks you, “Do you prefer ketchup or chocolate sauce on your food?” Undoubtedly, you will respond, “It depends on the type of food!” That’s the “it depends” nature of an interaction effect. You cannot answer the question without knowing more information about the other variable in the interaction term—which is the type of food in our example!

Given the specifics of the example, an interaction effect would not be surprising. If someone asks you, “Do you prefer ketchup or chocolate sauce on your food?” Undoubtedly, you will respond, “It depends on the type of food!” That’s the “it depends” nature of an interaction effect. You cannot answer the question without knowing more information about the other variable in the interaction term—which is the type of food in our example!

That’s the concept. Now, I’ll show you how to include an interaction term in your model and how to interpret the results.

How to Interpret Interaction Effects

Let’s perform our analysis. All statistical software allow you to add interaction terms in a model. Download the CSV data file to try it yourself: Interactions_Categorical.

Let’s perform our analysis. All statistical software allow you to add interaction terms in a model. Download the CSV data file to try it yourself: Interactions_Categorical.

Use the p-value for an interaction term to test its significance. In the output below, the circled p-value tells us that the interaction effect test (Food*Condiment) is statistically significant. Consequently, we know that the satisfaction you derive from the condiment depends on the type of food.

But how do we interpret the interaction in a model and truly understand what the data are saying? The best way to understand these effects is with a special type of line chart—an interaction plot. This type of plot displays the fitted values of the dependent variable on the y-axis while the x-axis shows the values of the first independent variable. Meanwhile, the various lines represent values of the second independent variable.

On an interaction plot, parallel lines indicate that there is no interaction effect while different slopes suggest that one might be present. Below is the plot for Food*Condiment.

The crossed lines on the graph suggest that there is an interaction effect, which the significant p-value for the Food*Condiment term confirms. The graph shows that enjoyment levels are higher for chocolate sauce when the food is ice cream. Conversely, satisfaction levels are higher for mustard when the food is a hot dog. If you put mustard on ice cream or chocolate sauce on hot dogs, you won’t be happy!

Which condiment is best? It depends on the type of food, and we’ve used statistics to demonstrate this effect.

Overlooking Interaction Effects is Dangerous!

When you have statistically significant interaction effects, you can’t interpret the main effects without considering the interactions. In the previous example, you can’t answer the question about which condiment is better without knowing the type of food. Again, “it depends.”

Suppose we want to maximize satisfaction by choosing the best food and the best condiment. However, imagine that we forgot to include the interaction effect and assessed only the main effects. We’ll make our decision based on the main effects plots below.

Based on these plots, we’d choose hot dogs with chocolate sauce because they each produce higher enjoyment. That’s not a good choice despite what the main effects show! When you have statistically significant interactions, you cannot interpret the main effect without considering the interaction effects.

Given the intentionally intuitive nature of our silly example, the consequence of disregarding the interaction effect is evident at a passing glance. However, that is not always the case, as you’ll see in the next example.

Example of an Interaction Effect with Continuous Independent Variables

For our next example, we’ll assess continuous independent variables in a regression model for a manufacturing process. The independent variables (processing time, temperature, and pressure) affect the dependent variable (product strength). Here’s the CSV data file if you want to try it yourself: Interactions_Continuous. To learn how to recreate the continuous interaction plot using Excel, download this Excel file: Continuous Interaction Excel.

In the interaction model, I’ll include temperature*pressure as an interaction effect. The results are below.

As you can see, the interaction effect test is statistically significant. But how do you interpret the interaction coefficient in the regression equation? You could try entering values into the regression equation and piece things together. However, it is much easier to use interaction plots!

Related post: How to Interpret Regression Coefficients and Their P-values for Main Effects

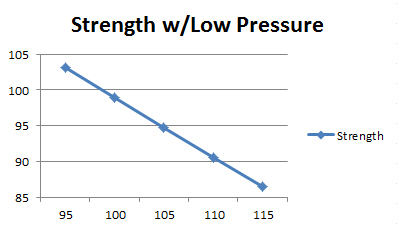

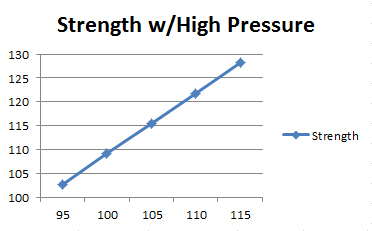

In the graph above, the variables are continuous rather than categorical. To produce the plot, the statistical software chooses a high value and a low value for pressure and enters them into the equation along with the range of values for temperature.

As you can see, the relationship between temperature and strength changes direction based on the pressure. For high pressures, there is a positive relationship between temperature and strength while for low pressures it is a negative relationship. By including the interaction term in the model, you can capture relationships that change based on the value of another variable.

If you want to maximize product strength and someone asks you if the process should use a high or low temperature, you’d have to respond, “It depends.” In this case, it depends on the pressure. You cannot answer the question about temperature without knowing the pressure value.

Important Considerations for Interaction Effects

While the plots help you interpret the interaction effects, use a hypothesis test to determine whether the effect is statistically significant. Plots can display non-parallel lines that represent random sampling error rather than an actual effect. P-values and hypothesis tests help you sort out the real effects from the noise.

The examples in this post are two-way interactions because there are two independent variables in each term (Food*Condiment and Temperature*Pressure). It’s equally valid to interpret these effects in two ways. For example, the relationship between:

- Satisfaction and Condiment depends on Food.

- Satisfaction and Food depends on Condiment.

You can have higher-order interactions. For example, a three-way interaction has three variables in the term, such as Food*Condiment*X. In this case, the relationship between Satisfaction and Condiment depends on both Food and X. However, this type of effect is challenging to interpret. In practice, analysts use them infrequently. However, in some models, they might be necessary to provide an adequate fit.

Finally, when an interaction effect test is statistically significant, do not attempt to interpret the main effects without considering the interaction effects. As the examples show, you will draw the wrong the conclusions!

If you’re learning regression and like the approach I use in my blog, check out my Intuitive Guide to Regression Analysis book! You can find it on Amazon and other retailers.

Hi Jim, great examples and very helpful, especially to my students! This is maybe an obvious question, but I find myself overthinking it and not finding a clear answer elsewhere online, so I wanted to ask – I’m using some interaction terms in SEM/path analysis (both variables are continuous, so it makes more sense than a multi-group analysis, and all variables are observed, b/c I know AMOS won’t do interaction terms of latent variables). I’ve created the interaction terms using centering, but conceptually, it still seems like it would make sense to correlate the error of the centered interaction term with the errors of each of its component variables? But is this correct? So in other words, if I am testing an interaction between depression and age and their joint influence on marital satisfaction (not my actual variables, making these up), and I then create an interaction term by multiplying centered versions of depression and age and specifying a path from that interaction term to marital satisfaction, as well as from uncentered depression to MS and uncentered age to MS, should I also correlate the error of the interaction term with the errors of depression and age? Or is that inappropriate once I’ve centered the variables to create the interaction term? Thanks!

Thank you for your blog. Should I remove a non-significant interaction term between row and column factors while doing a two-way ANOVA analysis and only keep the row and column main effects?

Hi Dave,

Yes, that’s generally the standard approach. An exception would be if you have strong theoretical reasons or previous research that suggests that the interaction effect exists despite the non-significant results.

Hi Jim,

I liked your blog about interactions. I am working with the interaction of two dummy variables and want to interpret its results. Can you please guide me how to do it?

Hi Rajni

In this post, pay particular attention to the mustard and chocolate sauce example. Those are two dummy variables and I work through the interpretation of their interaction effect.

I think part of the answer also lies in the standard error. I was also scratching my head over one of the main effects becoming insignificant (while the interaction was also insignificant), but then I saw the standard error had almost doubled.

That might be due to multicollinearity. Fitting an interaction term increases that. Try centering the variables to see if that helps. Read my post about multicollinearity for more details about this method!

Dear Jim,

Many thanks for your posts. I have a question regarding my results. The regression analysis shows that the coefficient of the interaction term is statistically significant. However, when I plot the lines, the graph shows no interaction effect. How should I interpret my result? Should I rely on the regression result or the graph?

Thank you

Saltanat

Hi Saltanat,

Typically, you’d expect to see either crossing lines or at least obviously non-parallel lines when you have a significant interaction effect. However, there are several possibilities for your case.

Keep in mind that the statistical test for an interaction effect evaluates whether the difference between the slopes is statistically significant. The fact that your interaction effect is significant indicates that the different between the lines is significant whether you see that on the graph or not. Consider, the following possibilities:

Perhaps your model has very high statistical power and can detect trivial differences between the lines. The lines are just barely non-parallel. This might happen if you have a large sample size and/or very low noise data. If this is true, your interaction effect might be statistically significant but probably aren’t practically significant.

Another possibility is the scaling for your graphs might be hiding a difference. That’s probably unlikely but at least worth considering. Perhaps the scaling is too zoomed out?

One thing to do is evaluate the predicted DV values for specific IVs for a model with and without the interaction effect. Do those predicted values change much? If they don’t change much, the interaction effect is not practically significant.

I hope that helps!

Good evening Mr. Frost. Can an interaction effect be found if there are only two variables: tslfest and agegp3?

Hi Candy,

Yes, assuming those two variables are independent variables in a model, it’s entirely possible they have an interaction effect. Two is the minimum number of independent variables that can have an interaction effect. I don’t know about those two specific variables, but it is at least possible.

Thanks for the article, really helpful. Just a question that I have in mind, when we run repeated measures two way anova and find out there is an interaction effect. Is it advisable to run one way anova on each factor to find out the simple effects? In my experiment, I’m using ARTool to trasnform ordinal data so that I could use anova on them https://depts.washington.edu/acelab/proj/art/. The tool documentation mentioend about using IV1*IV2 for pairwise comparison. But what if I want to use one way anova provided by the tool as well to find simple effects? Problem is each apporach generate different results 🙂

Hi Omar,

I’ve only taken a quick look at this tool, but it looks like a good one!

In general, when you have significant interaction effects, you don’t want to focus too much on only the main effects. In this context, the main effects can lead you astray! I show an example of that in this blog post. You really need to understand the interaction effects because in some cases they drastically change your understanding of the main effects. For this reason, whenever I’ve had significant interactions and needed to do pairwise comparisons, I’ve always included the interaction in those comparisons as the tool’s documentations suggests. (I’ve never used the tool but the principle should be the same.) Again, comparing main effects might lead you astray.

If you graph the main and interaction effects as I show in this post, you should be able to see whether the interaction effects significantly change your understanding of the model’s results.

I hope that helps!

How about when all of the two main variables that provide the main effect become statistically insignificant only after including the interaction term but were statistically significant before including the interaction term? And two models have similar residual plots.

Hi Elaine,

That can be a tricky situation. The question becomes, which model is better? The one with or without the interaction terms? It sounds like you could justify either model statistically. Here are some tips to consider.

If your IVs are continuous, center them to reduce multicollinearity in the model with interaction effect. Interaction terms jack up multicollinearity, which can reduce the power of the statistical tests. See if either of the main effects become significance after centering the variables and refitting the model. Click the link to learn more about that process.

If that doesn’t provide a clear answer (i.e., the main effects are still not significant), consider the following.

Is the R-squared and other goodness-of-fit measures notably better for one model or the other? While you don’t necessarily want to chase a high R-squared mindlessly, if one model does provide a better fit, that might help you decide.

Graph the interaction effect to see if it is strong. Perhaps it is statistically significant but practically not significant? I show what interaction plots look like in this post. If an interaction effect produces nearly parallel lines, it is fairly weak even if its p-value is significant.

Input values into the two models and see if it produces similar or different predicted values. It’s possible that despite the different forms of the model, they might be fairly equivalent.

Use subject-area knowledge to help you decide. Is the interaction effect theoretically justified? Evaluate other research and use your expertise to determine which model is better.

How do you interpret when one of the two main variables that provide the main effect become statistically insignificant only after including the interaction term but were statistically significant before including the interaction term?

Hi Simba,

When you include an interaction term in a regression model and observe that one of the main effects becomes statistically insignificant, it suggests that the effect of that variable is conditional on the level of the other variable. In other words, the effect of the main variable is not consistent across all levels of the other variable, and its significance is captured by the interaction term. That variable has no effect that is independent of the other variable.

However, there are several cautions about this interpretation. It’s possible that the main effect exists in the population but your regression analysis lacks sufficient power to detect it after including the interaction term. Remember, failing to reject the null hypothesis does not prove that the effect doesn’t exist. You can’t prove a negative. You just don’t have enough evidence so say that it does exist.

Also, you need to use your subject-area knowledge to theoretically evaluate the two models. Does it make more theoretical sense that the main effect or interaction effect exists? Or, perhaps theory suggests they both exist? Answering those questions will help you determine which model is correct. For more information on that topic, read Specifying the Correct Regression Model. Pay particular attention to the theory section near the end.

It’s always a good idea to plot the interaction to visualize and better understand the relationship.

I know that’s not a definitive answer but understanding those results and determining which model is best requires you to assess it theoretically. Also check those residual plots for each one!

it truly is!

This article was SO helpful, thank you!

Hi Hannah! I’m so glad to hear that it helpful! 🙂

Hi Jim,

Thank you for your helpful link.

I have an issue regarding the quadratic and linear terms in a model. I have studied insect population in many places and plants species. I collected insects randomly every week.

So the model (mixed effects) is as follows:

– Fixed factors (place and plant species),

-Sampling date as quadratic and linear terms

– and plant (from which the insects were collected) as a random effect.

My questions are about the interactions:

1- is it enough to include only binary interaction among those effects: place, plant species and date (quadratic and linear effect)?

2- should I consider quadratic and linear terms in three way interaction (place:plant species:date + place:plant species:date^2)?

I appreciate your help.

Hi Mohannas,

It’s possible to go overboard with higher-order interactions. They become very difficult to interpret. However, if they dramatically improve your model’s fit and are theoretically sound, you should consider add three-way interactions.

However, in practice, I’ve never seen a three-way interaction contribution much to the model. That’s not to say it can’t happen. But only add them if they really make sense theoretically and demonstrably improve your model’s fit.

Hello Jim, what happens when we have to deal with a variable that only has a value if another condition is met? Suppose we run a regression and we want to assess the impact of years of marriage, however this only applies to married people. Can we model the the problem the following way?

y ~ if.married+if.married*years.marriage

If so, how can I interpret the results of this? What does it mean to have a significant main term (if.married) but an insignificant interaction term? If the interaction is insignificant, but the main term is significant, can we just maintain the main term?

Thank you

Hi Jim,

Thank you for your help!

Honestly I didn’t fully understand the part of using post hoc tests.

If I add a categorical variable, with several levels, in a regression (I use R), the output already gives me the p-values, and coefficients, for each level, compared with the reference level. After that, I would run an ANOVA between a model with, and a model without the variable, to test if, overall, the categorical variable is significant or not. But, I got the idea that, what you are sugesting is a test to evaluate the differences among each combination of levels, instead of just the reference (output of a regression). Is that correct?

Hi Isa,

Read my post that I link to and learn about post hoc tests and the need to control the familywise error rate. Yes, you can get the p-values for the difference but with multiple groups there’s an increasing chance of a false positive (despite significant p-values).

There are different ways to assess the mean differences. One is the regression coefficients as you mention, which compares the means to the baseline level. Another is to compare each group’s mean to the other groups means to determine which groups are significantly different from each other. Because you were mentioning creating groups based on all the possible combinations, the all pairwise approach makes more sense than comparing to a baseline level.

But, either way, you should control the familywise error rate. Read my post for information about why that is so important. In particular, you described creating multiple groups for all the combinations and that’s when the familywise error rate really starts to grow!

Hello Jim,

Thanks for your article!

I have a question regarding interactions with binary variables. Considering the example you showed (Food+Condiment) is there any advantage in considering an interaction instead of modelling all possible combinations as a categorical variable?

My suggestion is: instead of doing Food*Condiment, we would create a categorical variable with the following levels: HotDog+Mustard; HotDog+Chocolate; IceCream+Chocolate; IceCream+Mustard. The results would show the difference between that certain level and the reference level. In my opinion this “all levels” approach seems easier to understand but is it correct?

Of course that for categorical variables with several levels (for example, several types of food and several condiments) this solution would be impractical, so I am just talking about two binary variables.

Hi Isa,

Conceptually, you’re correct that you could include in the model the groups based on the combinations of categorical levels. However, there are practical drawbacks for using that approach by itself. That method would require more observations because you need minimum number of observations per group. Additionally, because you’re comparing multiple means, you’d need to use a post hoc multiple comparison method that controls the familywise error rate, which reduces statistical power.

By including an interaction term, you’re directly testing the idea that the relationship between one IV and the DV depends on the value of another IV. You kind of get that with the group approach, but you’re not directly testing the interaction effect. Also, by including the interaction term rather than the groups, you don’t have the sample size problem associated with including more groups nor do you have the need for controlling the familywise error rate. Finally, using interaction plots (or group means displayed in a table), the results are no harder to interpret than using your method.

In practice, I’ve seen both methods used together. Statisticians will often include the interaction term and see if it is significant. If it is and they need to know if the differences between those group means are statistically significant, they can then perform the pairwise multiple comparisons on them. But usually it is worthwhile knowing whether the interaction term is significant, and then possibly determining whether any of the differences between group means are significant.

I’d see the two methods as complementary, but usually starting with the interaction term. I hope that helps!

Hi Jim!

Lots Thank you for explaining these concepts.

I have a question about interaction effect tests.

In my study, I had two groups: a smokers’ group with three categories (including heavy , medium, and light smoking) and a non-smokers’ group (as control )

to evaluate the interaction effect of smoking on the depednent contanous variable (platelet count).

What is the best statistical test that can be applied to determine the effect of smoking on platelet count? What is the best statistical test that can be applied to determine the relationship between smoking and platelet count?

Hi Zaid,

To have an interaction effect, you must have at least two independent variables (IV). Then you can say that the relationship between IV1 and the dependent variable (DV) depends on the value of IV2.

It appears like you only have one IV (smoking level) and you have four levels of that IV (non, light, medium, heavy). You can use one-way ANOVA to find the main effect of smoking on platelet count. That test will tell you if the differences between the groups mean platelet count are statistically significant. Just be aware that is a main effect and not an interaction effect.

Dear Jim, I have an important question on a matter I have to understand for a piece of research I’m conducting myself. You said enjoyment might depend on the Food*Condiment interaction, ok. What I don’t get is a point at a deeper level. Let’s suppose you want to do a repeated measure anova, because the same subject eats different types of foods with different condiments (which is the design of my own research) Let’s assume Food has three levels: sandwiches, pasta and meet and Condiment has two: ketchup and mayonnaise. The interaction might mean two things, among others, that is:

1) WITHIN the “sandwich” level, people prefer it with mayonnaise (higher enjoyment rate for mayonnaise) and not with ketchup. In this case, mayonnaise would show enjoyment rates which are higher in a statistical relevant way than ketchup WITHIN one of the levels of the other factor.

OR:

2) WITHIN “ketchup” level, people prefer to eat ketchup with pasta than with meet. In this other case, the comparison of the enjoyment means from the subjects is between food types and not condiment types.

I am stuck in this point, because the two things are different.

Thank you so much,

Vittorio

Hi Jim, I gain lot of guidance from your discussions on statistics. I am stuck in a concept of multiple regression. If you can guide me it will be a great help. Following is my concept of background working of multiple regression: When we have two independent variables, first, we get residuals of both independent variables by regressing both variables on each other. Then we perform simple regression using residuals of both independent variables against the dependent variable, separately. This step gives us one beta for residual of each variable which is essentially considered as beta of each independent variable in multiple regression. Is this concept true? If so, I can understand that residual of one independent variable is necessary to obtain in order to break the multicollinearity between both independent variables. However, is it fair to get and use residual of second independent variable as well? I tried my best to put question in right way. If you need me to make it more elaborated, I can give it another try. Your answer will be highly appreciated.

Sajid

Hi Muhammad,

When you have two independent variables, you only fit the model once and that model contains both IVs. Hence, even when you have two IVs, you still obtain only one set of residuals. You don’t fit separate models for each IV.

In the future, try to place you comment/question in a more appropriate post. It makes reading through the comments more relevant for each post. To find a closer match, use the search box near the upper right-hand margin of my website. Thanks!

Thank you very much Jim!

Hi Jim

I am having trouble interpreting my own results of a two-way repeated ANOVA and was wondering if you could help me out.

DV: negative affect

IV:sleep quality (good or bad)

IV:gender

I found a significant main effect of sleep quality and negative affect but no significant main effect of gender and negative affect. However, i did find a significant interaction between sleep quality and gender. What could you conclude from this or how would you interpret these results?

Dear mr. Frost,

I have a question regarding the interpretation of the repeated measures ANOVA. I conduct a part of my study to investigate the best way to identify the maximum voluntary contraction (MVC) in healthy subjects between 2 different protocols to measure the MVC (ref) and 4 different methods of determing the MVC (mean of the mean, mean of maximum, maximum of the mean and maximum of maximum). Therefore, in my analysis, I have 2 values of the 2 different protocols and values of 4 the conditions for each examined muscle.

I conducted in my analysis a repeated measures of ANOVA with a Bonferroni. On 1 particular muscle, the serratus anterior (SA), my results of the “Tests of Within-Subjects Effects” state that I have a significant interaction effect between the 4 conditions and the 2 values of the 2 different. So this means that I either have a difference in values between the 2 protocols but not for each condition i gues??

I got stuck with the interpretation of the interaction effects between this 2 types of factors.

Would it be possible for you to help me interpret the results?

Thank you in advance.

Kind regards,

Symon

Hi Jim!

Thank you so much for the quick reply.

When you say increasing the sample size by 10, is that per group or overall? E.g. if I have 20 participants and want to add the sex interaction term, if I have 10 males and 10 females, do I increase the sample size to 30 or 40?

Thank you!

Eva

Hi Eva,

You’re very welcome!

Ah, I’m glad you asked that follow-up question. The guideline for a minimum of 10 observations is for continuous independent variables. It didn’t click with me that gender is, of course, categorical. Unfortunately, it’s a bit more complicated for categorical variables because it depends on the number of groups and how they’re split between the groups. Most requirements for categorical variables assume an even split. I’m forgetting some of the specific guidelines for categorical variables, but I’d guestimate that you’d need an additional 20 observations to add the gender variable with half men (10)/half women (10). If you have unequal group sizes, you’d ensure that the smaller group exceeds the threshold.

In the other post I recommended, I include a reference that discusses this in more detail. I don’t have it on hand, but it might provide additional information about it. Also, given the complexity of your design, I’d be sure to run it by your statisticians to be sure.

Finally, these guidelines are for the minimums. And you’d rather not be right at the minimums if at all possible!

Hi Jim!

Thank you for the straightforward blog posts explaining these concepts.

I have a question about interaction tests.

I am designing an experiment and deciding on which test to use. The study is essentially a biomechanical test pre and post a treatment, so for checking treatment effects on the outputs (which are continuous) I will use a paired t-test. However, I also want to check the effects of sex and menstrual cycle phase. For the menstruating females, they are tested at 3 phases in the cycle. Another group of oral birth control users is also tested at 3 times across the cycle.

Now, one statistician recommended just putting everything in a linear mixed effects model (sex, menstrual cycle phase, birth control). Another one suggested doing the sex comparison separate and testing the change due to treatment between the groups, and then to test menstrual cycle effects comparing change due to fatigue in pairwise tests between phases (1 and 2, 2 and 3 etc) and also comparing between birth control and non birth control users, which ends up being a lot of separate tests.

I was also thinking I could instead test sex effect on the treatment outcome with an ANOVA with interaction (sex x treatment), using the average values for the females (since they are tested 3 times), and then for the menstrual cycle effects check phase x treatment x birth control, or phase x treatment first, and then do a separate test to compare changes due to treatment between birth control users and non birth control users at each phase (or use a linear mixed effects model only for the females).

Regarding the interaction tests, someone raised the issue of increased sample size being needed (https://statmodeling.stat.columbia.edu/2018/03/15/need-16-times-sample-size-estimate-interaction-estimate-main-effect/).

But another person mentioned that if I would do all the separate tests between phases etc then this would be an issue with multiple testing and would need correction. Which I assume would also then require a higher sample size for the same power and effect size, correct?

I am very new to stats so any help is much appreciated, especially explaining pros and cons of these approaches. I am also not sure whether, regardless of the tests for sex and phase, I should do a separate t-test for the primary outcome (pre vs post treatment) first and whether I need to correct p values for this or only for the subsequent tests.

Thank you!

Hi Eva,

As for the general approach, it sounds like you have a fairly complex experiment. My sense is to include as much as you can in a single model and not perform separate tests.

Instead of a paired t-test, use the change in the outcome as your dependent variable. Then include all the various other IVs in the model. This approach improves upon a t-test because you’re still assessing the changes in the outcome, just like a paired t-test, but you’re also accounting for addition sources of variability in the model, which the paired t-test can’t do. If there’s a chance that the treatment works better in women or men, then you should include an interaction term.

Again, I would try to avoid separate tests and build them into the model. Based on the rough view of your experiment that I have, I don’t see the need for separate tests. You can perform the multiple comparison tests as needed to do the adjustments for the multiple tests, but I would have the main tests all be part of the model, and the perform the multiple comparison tests based on the model results.

You definitely need a larger sample size when include interaction terms, or any other type of term (main effect, polynomial for curvature, etc.) I’m not sure that I agree with that link you share that you need 16 times the sample size though. (The author proposes a very specific set of assumptions about relative effect sizes and then generalizes from there. Not all interaction effects will fit his specific assumptions.)

Typically, you should increase your sample size by at least 10 for each additional term. Preferably more than that but 10 is a common guideline. And that’s an increase of 10, NOT 10X! Before collecting data, you should consider all the possible complexities that you might want to include in your model and figure out how many terms that entails. From there, you can develop a sense of the minimum sample size you should obtain.

As I show in this post, if interaction effects are present and you don’t account for them in the model, your results can be misleading! However, you don’t want to add them all willy nilly either.

I write about that in my post about overfitting your model, which includes a reference for sample size guidelines.

I probably didn’t answer all your questions, but it seems like a fairly complex experiment. I think many of the answers will depend on subject-area knowledge that I don’t have. But hopefully some of the tips are helpful!

Hi Jim,

I am currently doing my dissertation and am doing a 3 (price difference: no/low/high, within subjects) X 2 (information level: low/high, between groups) mixed ANOVA to assess the affect of price and information on consumers sustainable shopping decisions. I have significant main effects of both price and information, but the interaction was not significant. When interpreting these results, what does the non-significant interaction tell me about the main effects?

Also is there any other possible reason for the non-significant interaction eg narrow sample type etc?

Thank you!

Hi Jim,

Do you have R code by chance?

Amelia

Hi Jim,

Great!! Thank you for the explanation. Very helpful and informative.

Thank you for the this, great explanation.

My question is, do we rely only on the p-value to indicate significance in interactions?

All thanks

Hi Layan,

In terms of saying whether the interaction is statistically significant or not, yes, you go only by the p-value. However, determining whether to include it or not in your model is a different matter.

You should never reply solely on p-values for fitting your model. They definitely play a role but shouldn’t be the sole decider. For more information about that, read my post, Choosing the Correct Regression Model.

That definitely applies to interaction terms. If you get a significant p-value and the interaction makes theoretical sense, leave it in. However, if it’s significant but runs counter to theory, you should consider excluding it despite the statistical significance. And if the interaction is not significant but theory and/or other studies strongly suggest it should be included, you can include it regardless of the p-value. Just be sure to explain your approach and reasoning in the write-up and include that actual p-value/significance (or not) in your discussion. For example, if it’s not significant but you need to include it for theoretical/other researcher reasons, you’d say something to the effect, “the A*B interaction was not significant in my model, but I’m including it because other research indicates it’s a relevant term.” Then some details about the other research or theory.

I’m not sure if you were asking about only determining significance/nonsignificance or the larger issue of whether to include it in your model, but that’s how to handle both of those questions!

“The other main reason is that when you include the interaction term in the model, it accounts for enough of the variance that the main effect used to account for before you added the interaction, that it is longer significant. If that’s the case, it’s not a problem! It just means that for the main effect in question, that variable’s effect entirely depends on the value of the other variable in the interaction term. None of that variable’s effect is independent of that other variable. That happens sometimes!”

Would this be true if the interaction term was not significant?

Hi Alice,

It can be true. It’s possible that there’s not enough significance to go around, so to speak, leaving both insignificant.

Hi Jim,

Thanks for a great explanation!

What happens if, once you add the interaction term, the main effect is no longer statistically significant, but the interaction term is?

Thank you,

Hania

Hi Hania,

That can happen for a couple of reasons. One, if you have an interaction term that contains two continuous variables, it introduces multicollinearity into the model, which can reduce statistical significance. There’s an easy fix for this problem. Just center your continuous variables, which means you subtract each variable’s mean from all its observed values. I write about this approach and show an example in my post about multicollinearity.

The other main reason is that when you include the interaction term in the model, it accounts for enough of the variance that the main effect used to account for before you added the interaction, that it is longer significant. If that’s the case, it’s not a problem! It just means that for the main effect in question, that variable’s effect entirely depends on the value of the other variable in the interaction term. None of that variable’s effect is independent of that other variable. That happens sometimes!

Thank you Jim, that is really helpful to me!

I am currently on research and in my research I have 3 independent variables (x, y, z) and one dependent variable. after conducting a 3-way ANOVA, I have seen that, all the 3 variables and their interaction are significant. I have no idea what to do next. please help me how to solve this🙏🙏

Dear Jim, thanks for your time, and valuable info . My analysis has result for one of the dimensions impact is statistically insignificant while interaction effect is significant. I was told not right to interpret. Now I see it could be. Can you please send me a source to refer in my thesis? Thx, abd Best Regards

Hi Serap,

My go to reference is Applied Linear Statistical Models by Neter et. al. I haven’t looked to see if it discusses this aspect of interaction effects, but I’d imagine it would in its over 1000 pages!

Yes, when you have significant interaction effects, you need to understand them and incorporate them into your conclusions. Failure to do so can lead you to incorrect conclusions. This is true whether or not your main effects are significant.

Thank you Jim. This is very helpful!

I have one question regarding interaction. Let’s say I have two dichotomous variables A and B. What is the difference between using an interaction term A*B in the model vs. creating a grouping variable that has four levels (A+B+; A+B-; A-B+; A-B-)?

Thanks!

Hi,

In my paper, I have two independent variables (infusion time and amount of lemongrass) which has a significant interaction. I am unsure as to how to explain and support this in words.

Thank you

Hi Jim!!

Thanks for taking your time to answer our questions, I discovered your page today and it’s great!

If you have some time, I would like to ask you about interaction terms in a time series regression, such as an ARDL model. My questions are two.

i) Does an interaction term with two variables, let’s call these X1{t} and X2{t}, need lags in this type of model?

ii) If I have some interaction term like X3{t}=X1{t}*X2{t}, is it necessary to apply a unit root test to check for stationarity, right? In that case, what is the best way to reach it?

Thanks a lot !!!

Hi Jim! Thanks for you post!

How do I report the interaction on the text? Is it correct to say that the codiment has effect on satisfaction only if it interact qith the type of food?

Hi Jim,

How to Automatically judge whether there are interaction terms between independent variables by using a package in R? Is there a way to do this?

As I have 5 categorical independent variables and 4 continuous independent variales. It’s hard for me to check it one by one manually.

Hi Linlin,

I don’t know about R, but various software packages have routines that will try all sort of combinations of variables. However, I do not recommend the use of such automated procedures. That increases your chances for finding chance correlations that are meaningless. Read my post about datamining for more information. Ideally, theory and subject-area knowledge should be guides when specifying your regression model.

Hello Jim,

I got to know about the awesome work you are doing in the statistics field from someone I am following on YouTube and LinkedIn in the Data Analysis and Data Science space(s).

With respect to interactions effects, I have some questions:

(1) should the interaction terms be included in a multiple regression model at all times if they are statistically significant? Or is it the research/study question(s) that should determine their inclusion?

(2) How do you determine the interaction terms to include? For instance, in the second example in this blog of a manufacturing process, I see that you used Pressure and Temperature as the interaction terms.

Finally, I analyzed the data in (2) using Excel. While I obtained the same model output as in your example, the interaction plot I created had parallel lines for high and low pressure respectively, suggesting a lack of interaction. I used this formula

Strength = 0.1210*Temperature*Pressure

to derive fitted/predicted values for the dependent variable along with the high and low values for pressure and the range of values for temperature. Please is there something I did incorrectly?

I wanted to send you an email or post the image here but these have proven difficult.

Hoping to hear from you.

Thank you.

Jefferson

Hi Jefferson,

Sorry about the delay replying! Thanks for writing with the great questions.

In the best case scenario, you add the interaction terms based on theoretical reasons that support there being an interaction effect. You can go “fishing” for a significant interaction term to see if it is significant. But be aware that doing that too much increases your probability of finding chance effects. For more information about that, read my post about data mining. If you do add interaction terms “just to see” and you find a significant one, be sure that it makes logical/theoretical sense.

So, there’s not a hard and fast rule for knowing when to include interaction terms. It’s bit of an art as well as a science. For more details about that aspect, read my post about Model Specification: Choosing the Correct Regression Model. But try to use theory and subject-area knowledge as guides and be sure that the model makes sense in the real-world. You don’t want to be in a situation where the only reason you’re including variables and interactions is just because they’re significant. They have to make sense too.

I’m not sure why your Excel recreation turned out that way. I recreated the graph in Excel myself and got replicated the graph in the post almost exactly. You need to use the entire equation and enter values for all the terms. For the Time variable, I entered the mean time. The Excel version I made is below. Additionally, I’ve added a link to the Excel dataset in the post itself. You can download that and see how I made it.

I hope that helps!

Hi Jim,

I am attempting to explain a three-way interaction.

I examine the effect of X on Y condition on two moderators (W and Z; W and Z are positive values).

I expect that W reduces the negative effect of X on Y; Z strengthens the negative effect of X on Y; and I need to conclude which one has stronger effect on the relationship between X and Y.

Case 1: W and Z are continuous variables, the outcome is:

Y = 0.023 -X(0.941-0.009*W+0.340Z-0.201WZ)

Case 2: W and Z are dummy variables (taking a value of 1 or 0), the outcome is

Y = 0.016 -X(0.967-0.092*1+0.145*1-0.253*1*1) (I replaced W=1 and Z=1)

How I can interpret the three way effect in this case. Could you give me a help?

Thank you

Thank you for your quick response! Really helpful 🙂

Jim,

Thank you so much for your time explaining these concepts! I’m reading medical literature and trying to figure out the difference between a P-interaction and a p-value. This is a very important study with a P-interaction=0.57 so being that it is not <0.05 (statistically significant), I'm thinking P-interaction must be the 3-way interaction rather than the main effects.

Thanks so much!

Becky

Hi Becky,

I don’t know what a P-interaction is? Do you mean a p-value for an interaction term?

P-values for interaction terms work the same way as they do for main effects and I’ve never seen them given a distinct name. When they’re less than your significance level, they indicate that the interaction (or main effect) is statistically significant. More specifically, they’re all testing whether the coefficient for the term (whether it’s for a main effect or interaction effect) equals zero. When your p-value is less than the significance level, the difference between the coefficient and zero is statistically significant. You can reject the null that effect is zero (i.e., rule out no effect). All of that applies to main effects and interaction effects. It’s how you interpret the effect itself that varies.

I hope that helps!

Jim — this is so helpful. I think I get it now. We can imagine a scenario where sleep and study are not correlated. The good students, no matter how much they sleep, still find the time to study. The bad students, no matter how much they sleep, still don’t study much. So sleep hours and study hours are not correlated.

And yet, there can still be an interaction effect between sleep and study. For example, we could imagine that for the students who study a lot, getting extra sleep has a big, positive impact on their GPA. But for the students who study a little, getting extra sleep has only a tiny, positive impact on their GPA.

Thus, there was no correlation between sleep and study, but their interaction was still significant.

Did I get that right?

Hi Max,

Yes, that’s it exactly! There doesn’t need to be a correlation between the IVs for an interaction effect to exist.

Hi Jim, thank you for writing this article!

I was wondering if you could help me with this. I conducted a regression analysis including an interaction between two categorical variables. (Sequel*Book) [yes=1; no=0] on movie performance.

I found a positive significant interaction effect, can I now say that the performance of book on movie performance is based on if it is a sequel or not? And thus suggest that if sequel = 1 this positively affects performance?

Thank you!

Hi Emily,

Yes, if you have that type of interaction and it is statistically significant, you can say that the relationship between Book (Yes/No) and Movie Performance depends on whether it’s a sequel. Using an interaction plot should help bring the relationships to life. From what you write, it sounds like the interaction effect favors movies that are a sequel and based on a book. That combination of characteristics produces a higher performance than you’d expect based on main effects alone.

Hi Jim,

Thank you so much!! I really appreciate you taking the time to answer my questions! If I may, I have another couple of questions that arose when I ran the moderations:

First, I had only one three-way interaction (I*C*E) that was b = .002, p = .049, and I am not sure if I should keep it in. To give more context, R square change = .013, R^2 = .404, F = 3.910, p = .049.

Second, one of the lower order terms I have in my model (C*E) is a product of the two moderators and it was not significant in any of the 15 moderations and it does not contribute to my hypothesis. Should I still retain the C*E when I run this even though it’s not technically something I’m looking at? I was told I didn’t have to, but since it is a lower order term (and of course I, C, E and I*C and I*E are included) that contributes to the I*C*E interaction, I am conflicted on if I should keep it in there.

Also, given that I am running 15 moderations, would you happen to know if I should use Bonferroni to correct the alpha value? I do not want to p-hack my results.

Thank you again!

Dilum

Hi Dilum,

You’re very welcome!

If that’s the change in R-squared due to the three-way interaction term, that’s a very small increase! And, it appears to be borderline significant anyway. It might not be worth including. You can enter values in that equation and the model without the 3-way interaction to see if the predictions change much. If they don’t, it’s more argument to not include the 3-way, unless there are strong theoretical/subject-area reasons to include.

Also, that’s a ton of interaction (moderation) terms. Are they all significant? How many observations do you have? I’m not sure if you said earlier. With so many terms in the model, you have to start worrying about overfitting your model.

Do you have theoretical reasons to include so many interaction terms? Or does it just improve the fit? I don’t think I’ve ever seen a model with so many interactions!

Thank you.

My study is concerned with critical thinking skills measured by the health science reasoning test (hsrt) and the levels of academic performance (measured as A+, A, etc) . The critical thinking skills are divided into 5 subscales. I am after the impact of a single critical thinking skill or a combination of them to the levels of academic performance.

When I used Interaction, I found that there are significant relationship. I used the main effect, the interaction between two up to even 4 critical thinking skills (A*B*C*D) . Am I on the right track?

Thank you very much.

Hi Liza,

You’re very welcome!

One thing you should do is see what similar studies have done. When building a model, theory should be a guide. I write about that in my post about model specification. Typically, you don’t want to add terms only because they’re significant.

However, that’s not to say that you’re going down the wrong path. Just something to be aware of.

If you’re adding these terms, they’re significant, and they make theoretical sense, it sounds like you’re on the right track. Again, read my warning to Dilum about three-way interactions. That would apply to four-way and higher interactions too. They’re going to be very hard to interpret. And they often don’t improve the model by very much. In practice, I don’t typically see more than two-way interactions.

They might make complete sense for research. Just be sure they’re notably improving the fit of the model! Also, with so many interaction terms, you should be centering your continuous variables because you’ll be introducing a ton of multicollinearity in your model. Fortunately, centering the continuous variables is a simple fix for that type of multicollinearity. Read my post about multicollinearity, which also illustrates using centering for interaction terms.

Thank you so much for your reply! Is interaction of THREE IVs against one DV possible?

One more question, if you may. What is the difference between interaction and two-way, between interaction and three-way?

Thank you very much.

Hi Liza,

Yes, a three-way interaction is definitely possible. However, read my very recent reply to Dilum in the comments for this post with cautions about three-way interaction terms!

A three-way interaction is when the relationship between an IV and a DV depends on the values of two other IVs in the model. A two-way interaction is where the relationship between an IV and DV depends on just one other IV in the model.

Hi Jim.

Thank you for your explanation.

So sorry for posting my comment here as i fail to find where to properly comment.

My query goes like this. I am finding out the impact of a single DV or a combination of several DVs and several IVs. Is it safe to assume that i can use GLM interaction?

Thank you very much for your time.

Hi Liza,

For most models, you’ll assess the relationship between one or more IVs on a single DV. There are exceptions but that’s typical.

In that scenario, if you have at least two IVs, yes, you can assess the main effects between each IV and the DV and the interaction effect between two or more IVs and the DV.

As long as you have at least two IVs, you can assess the interaction effect.

Hi Jim,

Thank you so much for this clear explanation. It cleared up a lot of things for me, but I have some questions that arose from my own research project that I am hoping you could answer.

I am running several moderations for a study, where I’m looking at different executive functions as measured by one test. So the DV(s) are the five executive functions, and I also have three IVs that three symptoms of a disorder, and three moderators. My syntax for the model looks something like this, but multiplied by 15 (for the 5 DVs, for each of the three IVs):

IV1 M1 M2 M3 IV1*M1 IV1*M2 IV1*M3 IV1*M1*M3 IV1*M2*M3

My first question is: If I find that the three way interactions are not significant, but I find that one or more of the two way interactions ARE significant, do I drop the 3-way interaction terms from the model and rerun with my 2-way interactions and the predictors/moderators?

My second question is very similar to the above one: If neither the 2- or 3-way interactions are significant, do I drop them from the model and just run a linear regression with my 4 predictors?

My third question follows from both the above questions: Because I am testing 5 IVs from the same scale, and I have (technically) 15 models to run, if I drop any interaction term from any one of the models, do I have to drop them from the other 14? Is it okay to present some results where I only had 7 terms in the model and some where I had 4?

Thank you so much!

Hi Dilum,

Yes, analysts will typically remove interaction terms (and other terms) when they are not significant. The exception would be if you have strong theoretical reasons for leaving a term in even though it is not significant.

There is an exception involving interaction terms but it works the other way than what you describe. If you have a significant two-way interaction (X1*X2) but one or both of the main effects X1 X2 are not significant, you’d leave those insignificant effects in the model. The same goes for a three-way interaction. If that’s significant, you’d include the relevant main effects and two-way terms in the model even when they’re not significant. It allows the model to better estimate the interaction effects. The general rule is to include the lower order terms when the higher order terms are significant. Specifically, it’s the lower-order terms that comprise the significant higher-order term.

To summarize, if the high-order term is not significant, it’s usually fine to remove it. If a higher-order term is significant and one or more of the lower-order terms in it are not significant, leave them in.

As for three-way interaction terms, if you include those, be sure they notably increase the model’s fit. And, I don’t mean just that they’re significant but make a real practical difference. Three-way interaction terms are notoriously hard to interpret. When they’re significant, they often are improving the model by much. So, be sure that they’re really helping. If you really need them, include them in the model. I don’t see them used much in practice though. And, check to see what similar studies have done.

The answer to your last question really depends on the subject area and what theory/other studies say. Generally speaking, you don’t need to include the same IVs in different models. The results in one model don’t necessarily affect what you include in the other models. However, there might be concerns/issues specific to the subject area that state otherwise! While there’s no general rule that says the models must be the same, there could be reasons for your specific scenario. You’ll have to look in to that.

Thanks so much for your thoughtful and quick response, Jim. I truly appreciate it.

I get what you mean about how the process is fictional and just meant to illustrate a point.

It’s interesting to me that a correlation between x and y is not necessary for x*y to be a significant interaction term. I guess is my next question is…why not?

When I tried to explain interaction effects to someone the other day, I gave a different example:

GPA = a*Sleep + b*Study + c*Sleep*Study

I tried to say, “If the impact of study hours on GPA depends on sleep hours, then you have an interaction effect. For example, if study hours only boost GPA when sleep hours are greater than 6, then you have an interaction effect.” (I hope I explained that correctly! Let me know if not.)

The person responded, “Oh, so you mean that there’s a correlation between sleep and study?”

I can see why the person asked that question, and I’m not sure I have an intuitive explanation for why the answer to their question is, “No, not necessarily.”

I imagine these things are tricky to explain, and I hope I’m not taking us too far down a rabbit hole. Anyways, thanks again for your time!

Hi Max,

I have heard that confusion about interaction effects and the being correlated several times, so it’s definitely a common misconception!

First, let’s look at it conceptually. A two-way interaction means that the relationship between X and Y changes based on the value of Z. Y is the DV. X and Z are the IVs. There’s really no reason why a correlation between X and Z must be present to affect the relationship between X and Y. It’s just not a condition for it to happen.

Now, let’s look at this using your GPA example. Imagine that each person is essentially a relatively good or poor student and studies accordingly. Better students study more. Poor students study less. A bit of variation but that’s the tendency. Now, imagine that a good student happens to sleep longer than usual. Being a good student, they’ll still study a longer amount of time despite having less awake time to do it. Alternatively, imagine that a poor student happens to sleep less than usual. Despite having more awake hours in the day, they’re not going to study more. Hence, there’s no correlation between hours sleeping and hours studying. Despite this lack of correlation, the model is saying that the interaction effect is significant, which means that the relationship between Studying and GPA depends on Sleep (or the relationship between Sleep and GPA depends on Studying) even though Sleep and Study are not correlated.

Basically, the presence of these two conditions affect the relationship between the IV and DV even when the IVs aren’t correlated. And, ideally, you don’t want IVs to be correlated. That’s known as multicollinearity and excessive amounts of it can cause problems!

Hi Jim! Thanks for the helpful post. I have some thoughts and questions about interaction effects…

– What I really like about the condiments example is that it’s extremely intuitive.

– Might you have an example for continuous variables that is equally intuitive?

– Or maybe you could say a bit more about the pressure and temperature example. You described it as a “manufacturing process.” What might we be manufacturing there? (I know the math is the same no matter what, but if I have an intuitive understanding of the “real-world scenario,” it helps me grasp things better.)

And now, my big picture questions…

Is a correlation between “x” and “y” a necessary condition for an interaction effect of x*y?

So, in the current example, does the fact that there’s an interaction effect of pressure*temperature imply that there is a correlation between pressure and temperature?

Could you ever have an interaction effect of x*y without a correlation between “x” and “y”?

Thank you so much for your time and public service!

Hi Max,

The manufacturing process uses continuous variables in the interaction. The process is fictional, but you don’t really need to know it to understand what the interaction term is telling you. Pretend it’s a widget making process. Temperature and pressure are variables in the production process. You can set these variables to higher/lower temperatures and pressures. Perhaps it’s the temperature of the widget plastic and pressure it is injected into the mold. You get the idea. The idea is that these are variables the producer can set for their process.

The continuous interaction is telling you that the relationship between manufacturing temperature and the strength of the widgets changes depending on the pressure the process uses. If you use high pressure in the process, then increasing the temperature causes the mean strength to increase. However, if you use a lower pressure during manufacturing, then increasing the temperature causes the mean strength to drop.

A correlation is NOT necessary to have significant interaction terms. You can definitely have a significant interaction when the variables in it are not correlated with each other. Those are separate properties. In the example, temperature and pressure are not necessarily correlated. You can actually check by downloading the data and assessing their correlation. I haven’t checked that specifically so I don’t know offhand.

Thank you, Jim. I appreciate the time you have taken to create these wonderful posts.

You’re very welcome, Maura. I’m so happy to hear that they’ve been helpful!

hello jim,

In my Study to understand the effect of a therapy program onmemory function, I got significant main effects(within subject) but insignificant interaction effects(time* Therapy outcome). how can I interpret this?

Hi Ranil,

I’m not sure what you’re variables are. You indicate and interaction with time and the therapy outcome, but the interaction term will contain two independent variables and not an outcome variable.

However, in general, when you have significant main effects and the interaction effects are not significant, you know that there is a relationship between the IVs and the DV. Your data suggest those relationships exist (thanks to the significant main effects) and the relationships between each IV and the DV do not change based on the value of the other variable(s) in your interaction term because the interaction is not significant.

I hope that helps!

Hi Jim,

Would you probe the simple slopes if an interaction in the regression model was not significant? I probed it anyways and saw that one of the simple slopes was significant. Im not sure what this means.

Thanks so much!

Hi Erica,

If an interaction term is not significant, I’d consider removing it from the model and, as you say, assess the slopes for the main effects. The exception is if you have strong theoretical reasons to include an interaction term. Then you might include it despite the lack of significance. But, generally, you’d remove the interaction term.

Hi Jim,

Many thanks for the blog. I have recently purchased your Introduction to statistics book and also your regression book. Really like the way you explain difficult concepts. While I have understood the concept of “Interaction effects”, I am getting a different result while running the regression on the data for Interactions categorical. The methodology I have used is as follows :

1) Dependent Variable – Enjoyment.

2) 1st IV- Food – ( 1-Hotdog, 0-Icecream)

3) 2nd IV- Condiment- (0-Mustard, 1-Choclate Sauce)

4) 3rd IV- Food * Condiment ( Gives a value of 1 for Hotdog* Choclate Sauce and 0 for others).

Then run the regression on excel.

Output

Coefficients Standard Error t Stat P-value

Intercept 61.30891335 1.119532646 54.76295272 7.91E-63

Food 28.29677385 1.583258251 17.87249416 6.13E-29

Condiment 31.73918268 1.583258251 20.04675021 4.51E-32

Food*Condiment -56.02825797 2.239065292 -25.0230568 1.95E-38

The output is quite different from your output. Could you help me understand the difference or am I using incorrect methodology ?

Also, the interaction plot for enjoyment (Food* Condiment) where the line crosses each other seems difficult to plot on excel. Would be helpful if you could write a blog how such interaction plots can be done on excel.

Thanks a lot !!

Animesh

Hi again Jim,

So far I have read your book about regression and it is a great source of practical knowledge. Highly recommended for everyone who needs to run multiple regression.

This is where I learnt about checking for interactions.

I need some help to interpret my findings. I centralised my predictors and not the dependent variable. While the dependent variable and one predictor are the totals from validated scales, the other predictor was treated as continuous data but data is recorded on 1-5 (strongly disagree-strongly agree) Likert but not validated scale. This predictor when centralised did not come with the exact 0 mean but 0.0022. Is this something I should worry about?

Also the regression model with the interaction is overall significant but the interaction coefficient is not significant, p=.31. How should I interpret it. Does it mean that there is no interaction?

I wondered if you could also help please with a practical question regarding results write up. Would you present correlations and descriptive statistics of the analytical sample or regression sample after the residual outlier was removed?

Thank you in advance.

Tom

Hi Tom,

I’m so glad the regression book was helpful!

That slight difference from zero isn’t anything to worry about. It sounds like you’re interaction is not significant. Unless you have strong theoretical reasons for including it, I’d consider removing it from the model.

For the write up, I’d discuss the significant regression coefficients and what they mean. Do the signs and magnitudes of the coefficients match theory? You should discuss the outlier, explain why you removed it, and whether removing it changed the results much.

I hope that helps!

Hi Jim,

Hope you have been doing well! Thank you such extremely informative and easy to comprehend posts! They truly have clarified many statistics concepts for me!

I had a quick question regarding the confidence intervals (CIs) for interaction terms. Let’s consider the following situation:

The interaction term is made of a binary variable (B) and a continuous variable (M). Each of these have their own 95% CIs. My model appears as such:

Y= 2.2 – 0.2M + 0.1B+ ((0.12)M*B ) where B = 0 or B = 1.

I am interested in the beta coefficient in front of M and so, when I reduce this equation, I get:

Y = 2.2 -0.2M when B= 0 and

Y= 2.3 – 0.32 M when B= 1.

This was all well and good as now I can talk about how B being 0 or 1 can effect the outcome Y, but can I obtain the 95% CI for these two new equations’ beta coefficients? Simply using the lower once and then the upper 95% CI of the beta coefficients for M, B and their interaction term and doing the same math does and does not make sense to me. I hope you can guide me through this a bit.

I am using SAS, so it would be great if you could also guide with the code somehow (if there is one)!

Thanks!

Hi Neha,

The good news is that yes it is entirely possible to get the CI for that coefficient. Unfortunately, I’m don’t use SAS and can’t make a recommendation for how to have it calculate the CI. The CI will give you a range of likely values for the difference between the two slopes for M. Because your interaction effect is significant (I’ll assume at the 0.05 level), the 95% CI will exclude zero as a likely value.

Hi Jim, this was a great article. Thank you! I had a question. Are there some regression models in which effect measure modification may be present but the interaction term does not indicate presence of interaction?

Hi Patty,

I’m not entirely sure what you’re asking. But, if you have a significant interaction term in your model, then an interaction effect is present.

The only way that an interaction effect wouldn’t be present in that case is if your model is somehow biased/incorrect. Perhaps you’ve done one of the following: misspecified the model, omitted a confounding variable, or overfit the model. But then you can’t necessarily trust any part of your model.

However, if you specified the correct model and your interaction term is significant, you have an interaction effect.

Dear Jim,

Thank you for your precise and concise discussion on interaction terms in regression analysis. Please keep up the good work. I would like to know whether time series regression specifications can have interaction terms. I am trying to investigate the effect of interacting two macroeconomic variables in one country over a period of time. Thank you for your time.

Hi Eunice,

Thanks so much! Your kind words made my day!

Yes, you can definitely include interaction terms in time series regression.

Thank you! I really appreciate this website. 🙂

Jim,

In Chapter 5 of your ebook (great book by the way…worth every penny), you couch interaction effects in terms of variables (like A, B, and C above) which was very effective in conveying the concept. I did have a practical technical question however. If A’s main effect with Y is described in terms of a quadratic(Y=const+A+A^2) how do you check the interaction effect on Y along with the second variable B? Is it still simply A*B or should you include the squared term as such A*A^2*B? As a practical example from p82 in your ebook, you were showing the relationship between %Fat and BMI where the relationship was described well by a quadratic (%Fat=const+BMI+BMI^2). To extend that example, lets say that Age is also related to %Fat. How do you check the interaction effect between BMI and Age on %Fat?

Thanks in advance for your clarification,

Mike

Hi Mike,

I’m so glad to hear that my regression book has been helpful!!

That’s a great question. The answer is that the correct model depends on the nature of your data. If you have a quadratic term, A and A^2, you can certainly include one or both of the following interaction terms: A*B and A^2*B.

Again, it could be one, both, or neither. You have to see what works for your model while incorporating theory and other studies. Interpreting the coefficients becomes even more challenging! However, the interaction plots I highly recommend will show you the big picture. Logically, if a polynomial term includes an interaction, the lines on the interaction plot will be curvilinear rather than straight.

So, yes, it’s perfectly acceptable to add those types of interaction terms.

Hi Jim! Thanks for the great article. I have one question: I am doing multivariate logistic regression with 7 covariates. I would like to test if there is interaction between two of those variables. Should I check for interaction in the FULLY adjusted model? Or in a model that includes only the two variables and their interaction term? Thanks!

Hi Irena,

Typically, I’d include the interaction in the model with all the relevant variables rather than just the two variables. You want to estimate the interaction effect while accounting for the effects of all the other relevant variables. If you leave some variables out, it can bias the interaction effect.

Hi Jim,

First, thank you much for providing this kind of easy written information for people like me who are are statisticians.

My question is, I have 6 quantitative independent variables to do regression on a dependent quantitative variable. When I regress each independent variable on dependent variable, separately, I find every independent variable statistically very significant (p-values very less than 0.05, the max value is 0.004, rest are 0.000). When I do a multiple linear regression including all independent variables altogether, I find two of the independent variables statistically insignificant. I know that this situation arises due to interaction term and multicollinearity. Given the situation, should I drop the two non significant independent variables from the multiple regression model, while they were significant in the individual simple regression models. In same context, I find support from literature that these variables (two variables who got insignificant p-value in multiple regression) do affect the dependent variable. You answer in terms of keeping of dropping these variables will be appreciated. Thank you

Hi Muhammad,

If your model has an interaction term, you’re correct that it creates some multicollinearity. Fortunately, there is a simple fix for that. Just center your continuous variables. Read my post about multicollinearity to see that in action! Multicollinearity can reduce your statistical power, potentially eliminating the significance of your variables.

Definitely check into that because that could be a factor. There’s even a chance that you could restore their significance. And, in general, you should assess multicollinearity even outside the interaction effect. The post I link to shows you how to do all of that.