A least squares regression line represents the relationship between variables in a scatterplot. The procedure fits the line to the data points in a way that minimizes the sum of the squared vertical distances between the line and the points. It is also known as a line of best fit or a trend line.

In the example below, we could look at the data points and attempt to draw a line by hand that minimizes the overall distance between the line and points while ensuring about the same number of points are above and below the line.

That’s a tall order, particularly with larger datasets! And subjectivity creeps in.

Instead, we can use least squares regression to find the best possible line and its equation mathematically.

In this post, I’ll define a least squares regression line, explain how it works, and work through an example of finding that line by using the least squares formulas.

What is a Least Squares Regression Line?

A least squares regression line is a specific type of model that analysts frequently use to display relationships in their data. Statisticians call it “least squares” because it minimizes the residual sum of squares. Let’s unpack what that means!

The Importance of Residuals

Residuals are the differences between the observed data values and the least squares regression line. The line represents the model’s predictions. Hence, a residual is the difference between the observed value and the model’s predicted value. There is one residual per data point, and they collectively indicate the degree to which the model is wrong.

To calculate the residual mathematically, it’s simple subtraction.

Residual = Observed value – Model value.

Or, equivalently:

y – ŷ

Where ŷ is the regression model’s predicted value of y.
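Here is a minimal Python sketch of that subtraction. It uses a small made-up dataset and the illustrative line ŷ = 0.5 + 1.4x. Neither comes from this post's study-hours example.

```python
# Hypothetical data and line, for illustration only.
xs = [1, 2, 3, 4]
ys = [2, 3, 5, 6]
b, m = 0.5, 1.4  # intercept and slope of the illustrative line

# Model value (y-hat) for each x, then residual = observed - model.
y_hat = [b + m * x for x in xs]
residuals = [y - yh for y, yh in zip(ys, y_hat)]

# Positive residuals are points above the line; negative ones are below it.
print([round(r, 2) for r in residuals])  # [0.1, -0.3, 0.3, -0.1]
```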

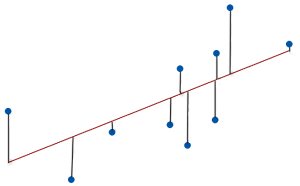

Graphically, residuals are the vertical distances between the observed values and the line, as shown in the image above. The lines connecting the data points to the regression line represent the residuals, and their lengths are the sizes of the residuals. Data points above the line have positive residuals, while those below have negative residuals.

The best models have data points close to the line, producing small absolute residuals.

Minimizing the Squared Error

Residuals represent the error in a least squares model. You want to minimize the total error because it means that the data points are collectively as close to the model’s values as possible.

Before minimizing error, you first need to quantify it.

Unfortunately, you can’t simply sum the residuals to represent the total error, because positive and negative values cancel each other out even when the individual residuals are large.

Instead, least squares regression squares the residuals so that none of them are negative. That way, the process can add them up without positive and negative values canceling each other out. Statisticians refer to squared residuals as squared errors and to their total as the sum of squared errors (SSE), shown below mathematically.

SSE = Σ(y – ŷ)²

Σ represents a sum. In this case, it’s the sum of all residuals squared. You’ll see a lot of sums in the least squares line formula section!
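The following minimal Python sketch computes SSE = Σ(y – ŷ)² and also illustrates the cancellation problem described above. The four data points and both lines are made up for illustration and are not the study-hours data. The names `residuals` and `sse` are hypothetical helpers.

```python
xs = [1, 2, 3, 4]
ys = [2, 3, 5, 6]

def residuals(b, m):
    """Observed minus predicted values for the line y-hat = b + m*x."""
    return [y - (b + m * x) for x, y in zip(xs, ys)]

def sse(b, m):
    """Sum of squared errors: square each residual, then add them up."""
    return sum(r ** 2 for r in residuals(b, m))

trend = (0.5, 1.4)  # line that follows the upward trend
flat = (4.0, 0.0)   # horizontal line at the mean of ys, ignores the trend

# Plain sums of residuals are (essentially) zero for both lines...
print(round(abs(sum(residuals(*trend))), 10), round(abs(sum(residuals(*flat))), 10))  # 0.0 0.0
# ...but SSE clearly separates the good fit from the poor one.
print(round(sse(*trend), 10), round(sse(*flat), 10))  # 0.2 10.0
```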

For a given dataset, the least squares regression line produces the smallest SSE compared to all other possible lines—hence, “least squares”!
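As a brute-force check of that claim, the sketch below uses made-up data. It evaluates SSE for every line on a coarse grid of intercepts and slopes and keeps the line with the smallest SSE. This is only an illustration. Least squares software does not search a grid; it solves for the minimum directly with formulas like the ones later in this post.

```python
xs = [1, 2, 3, 4]
ys = [2, 3, 5, 6]

def sse(b, m):
    """Sum of squared residuals for the line y-hat = b + m*x."""
    return sum((y - (b + m * x)) ** 2 for x, y in zip(xs, ys))

# Try intercepts -2.0..3.0 and slopes 0.0..3.0 in steps of 0.1.
candidates = ((i / 10, j / 10) for i in range(-20, 31) for j in range(0, 31))
best_b, best_m = min(candidates, key=lambda bm: sse(*bm))

print(best_b, best_m, round(sse(best_b, best_m), 6))  # 0.5 1.4 0.2
```

Every other line on the grid produces a larger SSE than y = 0.5 + 1.4x for these points.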

Least Squares Regression Line Example

Imagine we have a list of people’s study hours and test scores. In the scatterplot, we can see a positive relationship exists between study time and test scores. Statistical software can display the least squares regression line and its equation.

From the discussion above, we know that this line minimizes the sum of the squared vertical distances between the line and the data points. By that criterion, it’s impossible to draw a different line that fits these data better! Great!

But how does the least squares procedure find the line’s equation? We’ll work that out in the next section using these example data!

How to Find a Least Squares Regression Line

The regression output produces an equation for the best-fitting line. So, how do you find a least squares regression line?

First, I’ll cover the formulas and then use them to work through our example dataset.

Least Squares Regression Line Formulas

For starters, the following equation represents the best fitting regression line:

y = b + mx

Where:

- y is the dependent variable.

- x is the independent variable.

- b is the y-intercept.

- m is the slope of the line.

The slope represents the mean change in the dependent variable for a one-unit change in the independent variable.

You might recognize this equation as the slope-intercept form of a linear equation from algebra. For a refresher, read my post: Slope-Intercept Form: A Guide.

We need to calculate the values of m and b to find the equation for the best-fitting line.

Here are the least squares regression line formulas for the slope (m) and intercept (b):

m = (NΣxy – ΣxΣy) / (NΣx² – (Σx)²)

b = (Σy – mΣx) / N

Where:

- Σ represents a sum.

- N is the number of observations.

You must find the slope first because you need to enter that value into the formula for calculating the intercept.
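The sketch below translates the slope and intercept formulas into Python. It assumes the standard textbook forms m = (NΣxy – ΣxΣy) / (NΣx² – (Σx)²) and b = (Σy – mΣx) / N. The function name `least_squares_from_sums` is hypothetical, and the sums come from a small made-up dataset rather than the study-hours data.

```python
def least_squares_from_sums(n, sum_x, sum_y, sum_x2, sum_xy):
    """Return (m, b) for y = b + m*x from the five summary quantities."""
    denominator = n * sum_x2 - sum_x ** 2
    if denominator == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    m = (n * sum_xy - sum_x * sum_y) / denominator  # slope first...
    b = (sum_y - m * sum_x) / n                      # ...because b needs m
    return m, b

# Sums for the made-up data x = [1, 2, 3, 4], y = [2, 3, 5, 6]
m, b = least_squares_from_sums(n=4, sum_x=10, sum_y=16, sum_x2=30, sum_xy=47)
print(round(m, 6), round(b, 6))  # 1.4 0.5
```

The order of the two assignments mirrors the point above: the intercept formula cannot be evaluated until the slope is known.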

Worked Example

Let’s take the data from the hours of studying example. We’ll use the least squares regression line formulas to find the slope and the intercept (also called the constant) for our model.

To start, we need to calculate the following sums: Σx, Σy, Σx², and Σxy. We need these sums for the formulas. I’ve calculated the sums, as shown below. Download the Excel file that contains the dataset and calculations: Least Squares Regression Line example.
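The study-hours data are in the linked Excel file and are not reproduced here. This sketch therefore computes the four sums for a small made-up dataset. Replace `xs` and `ys` with your own values.

```python
# Made-up (hours, score) pairs; not the post's dataset.
xs = [1, 2, 3, 4]
ys = [2, 3, 5, 6]

n = len(xs)
sum_x = sum(xs)
sum_y = sum(ys)
sum_x2 = sum(x * x for x in xs)              # Σx²: square each x first, then add
sum_xy = sum(x * y for x, y in zip(xs, ys))  # Σxy: multiply each pair, then add

print(n, sum_x, sum_y, sum_x2, sum_xy)  # 4 10 16 30 47
```

Keep Σx² (30 here) distinct from (Σx)² (100 here). Mixing them up is an easy mistake when working by hand.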

Next, we’ll plug those sums into the slope formula.

Now that we have the slope (m), we can find the y-intercept (b) for the line.

Let’s plug the slope and intercept values into the least squares regression line equation:

y = 11.329 + 1.0616x

This linear equation matches the one that the software displays on the graph. We can use this equation to make predictions. For example, if we want to predict the score for studying 5 hours, we simply plug x = 5 into the equation:

y = 11.329 + 1.0616 * 5 = 16.637

Therefore, the model predicts that people studying for 5 hours will have an average test score of 16.637.
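Here is a small sketch of the prediction step, using the fitted equation from this example, y = 11.329 + 1.0616x. The function name `predict_score` is hypothetical. The sketch also shows what the slope means here: each extra hour of study adds about 1.0616 points to the predicted mean score.

```python
INTERCEPT = 11.329  # b, from the fitted equation above
SLOPE = 1.0616      # m, change in predicted mean score per extra study hour

def predict_score(hours):
    """Predicted mean test score for a given number of study hours."""
    return INTERCEPT + SLOPE * hours

print(round(predict_score(5), 3))                     # 16.637
print(round(predict_score(6) - predict_score(5), 4))  # 1.0616
```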

Learn how to assess the following least squares regression line output:

- Linear Regression Equation Explained

- Regression Coefficients and their P-values

- Assessing R-squared for Goodness-of-Fit

For accurate results, the least squares regression line must satisfy various assumptions. Read the following posts to learn how to assess these assumptions:
